OpenStack

is an open-source framework for data center IaaS (infrastructure as a service). The OpenStack framework contains a suite of software that together works as a cloud operating system for automating data center resources. You can find the list of ongoing projects under OpenStack here. A cloud OS such as OpenStack, combined with a network OS (a so-called SDN controller) like ONOS, makes a serious proposition for end-to-end infrastructure automation. In this three-part tutorial, we will build OpenStack based on the Newton release.

The above diagram shows the various OpenStack services and their subsystems. In this tutorial we will go through the following core services:

Keystone can be thought of as OpenStack's counterpart to Microsoft Active Directory: it is responsible for authentication and authorization of users as well as service API calls.

Glance provides the guest OS image library and catalogue service.

Nova is the compute resource manager. Nova talks to the hypervisor and is responsible for VM creation, deletion, etc.

Neutron is the network resource manager. It provides network services from L2 (switching) to L7 (load balancing, firewalling, IDS, etc.).

We will also cover the following optional service:

Horizon is the GUI of the controller node. Similar functionality can be achieved through the rich OpenStack CLI.

OpenStack services communicate with each other over APIs, hence each service needs API endpoints. Subsystems within each service communicate over a message bus using AMQP (Advanced Message Queuing Protocol). Several message brokers and messaging libraries can fill this role, such as ActiveMQ, RabbitMQ, ZeroMQ, and Apache Qpid. In this tutorial, we will use RabbitMQ.
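To make the idea of an API endpoint a little more concrete, here is a minimal sketch of the JSON body that a client sends to Keystone's Identity API v3 (`POST /v3/auth/tokens`) to obtain a token. The host name `controller`, the port, and the `demo` user, password, and project are placeholders I assume for illustration, not values from this setup. The script only builds and prints the request; it does not contact any server.

```python
import json


def build_token_request(username, password, project, domain="Default"):
    """Build the body for POST /v3/auth/tokens (password auth, project scope)."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scope the token to one project so other services can authorize it
            "scope": {
                "project": {"name": project, "domain": {"name": domain}}
            },
        }
    }


# Assumed endpoint: "controller" is a placeholder host name
KEYSTONE_TOKEN_URL = "http://controller:5000/v3/auth/tokens"

if __name__ == "__main__":
    body = build_token_request("demo", "secret", "demo")
    print("POST", KEYSTONE_TOKEN_URL)
    print(json.dumps(body, indent=2))
```

Keystone returns the token in the `X-Subject-Token` response header, and a client then passes it as `X-Auth-Token` when calling the endpoints of other services such as Glance, Nova, or Neutron.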

KVM

or Kernel-based Virtual Machine, is a Linux kernel module (aka driver) that is specific to the CPU architecture; that is, KVM requires the CPU's hardware virtualization feature. On Intel, this feature is known as VT-x, which you need to enable in the BIOS settings of the host machine.

KVM is a CPU driver, not a hypervisor by itself. OpenStack uses the open-source QEMU (Quick Emulator) as its hypervisor; more precisely, OpenStack drives QEMU through the libvirt library. On its own, QEMU is a type-2 hypervisor, which means it has to translate instructions between the vCPU and the physical CPU, and that has a performance impact. KVM turns QEMU (aka qemu-kvm) into a type-1 hypervisor: it allows vCPU instructions to be executed directly on the physical CPU.
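Before relying on KVM, it helps to check whether the host CPU actually exposes the virtualization feature. The sketch below looks for the `vmx` flag (Intel VT-x) in `/proc/cpuinfo`; it also accepts `svm`, the AMD equivalent, which this tutorial does not otherwise cover. The function names are my own, and the mapping to `kvm` or `qemu` mirrors the `virt_type` option in the `[libvirt]` section of `nova.conf`; treat it as a rough guide rather than a complete check.

```python
def cpu_virt_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # vmx = Intel VT-x, svm = AMD-V
            found |= {"vmx", "svm"} & set(value.split())
    return found


def suggest_virt_type(cpuinfo_text):
    """Suggest 'kvm' when hardware acceleration is visible, else plain 'qemu'."""
    return "kvm" if cpu_virt_flags(cpuinfo_text) else "qemu"


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        text = ""  # not a Linux host, or the file is unreadable
    print("virtualization flags:", sorted(cpu_virt_flags(text)))
    print("suggested virt_type:", suggest_virt_type(text))
```

If no flag shows up on a CPU that should support it, the feature is most likely disabled in the BIOS.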

OVS

VMs running on a single host often require network connections among themselves as well as with external networks. This connectivity can be achieved through virtual switches such as Linux Bridge, Open vSwitch (OVS), or vRouter (from OpenContrail). Linux Bridge is the simplest and oldest option, but it carries a performance penalty in large-scale deployments. OVS, on the other hand, is the most popular choice for several reasons. OVS can be configured to use the DPDK poll-mode driver, which significantly improves performance. The OVN enhancement allows all L2, L3, and security-group functions to be consolidated under OVS, instead of being spread across separate Neutron agents.

OVS supports the OpenFlow and OVSDB protocols for forwarding-plane programming and management, respectively. However, as far as I know at the time of writing, OpenStack Neutron cannot use either of them directly. OpenStack needs a network OS like OpenDaylight for SDN control over OVS.

Let’s Get Started

As you can see from the following diagram, the setup is pretty simple. I’ve used two Intel NUCs for this setup. Since a NUC has only one NIC, the configurations in the next two sections assume a self-service network scenario, not a provider network.

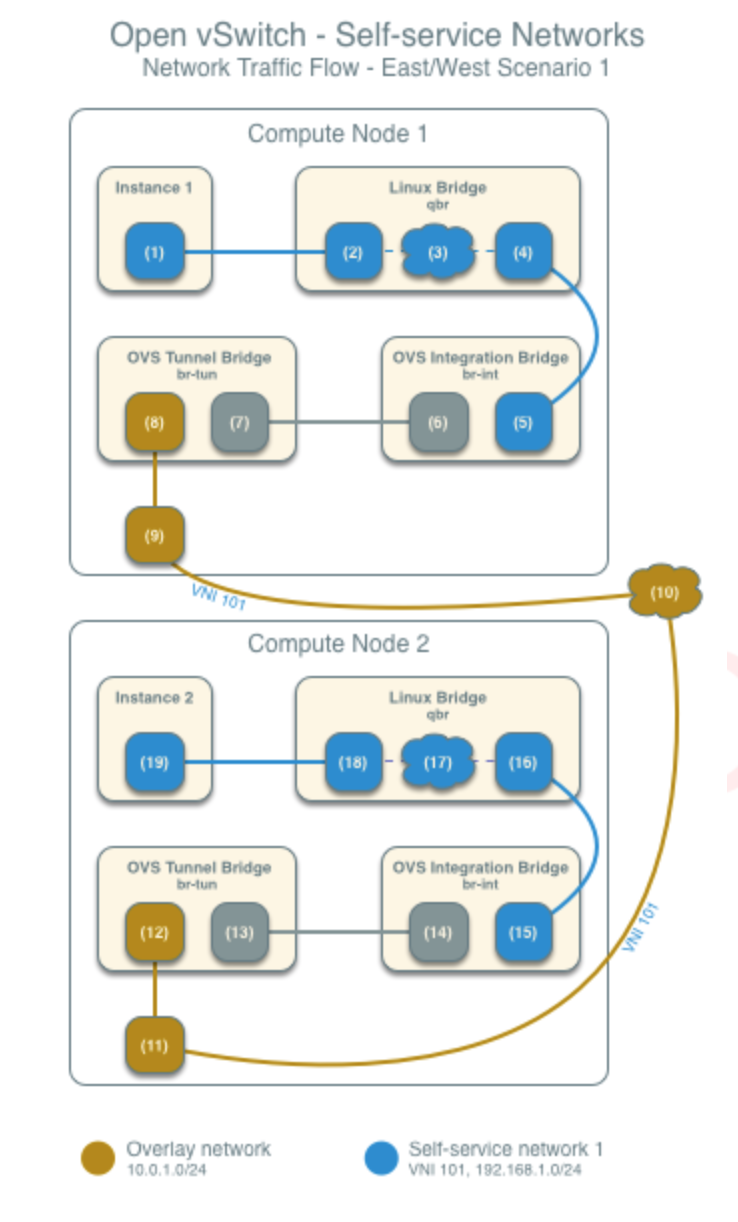

In our case, traffic between VMs on two physical hosts will follow the path as shown below: